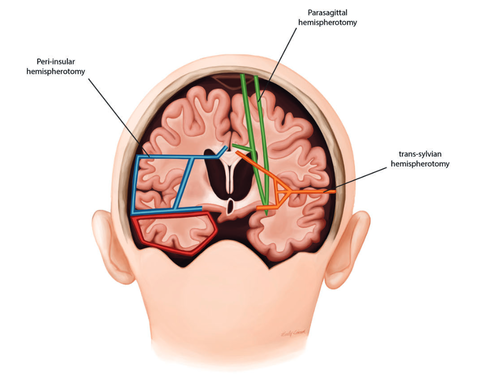

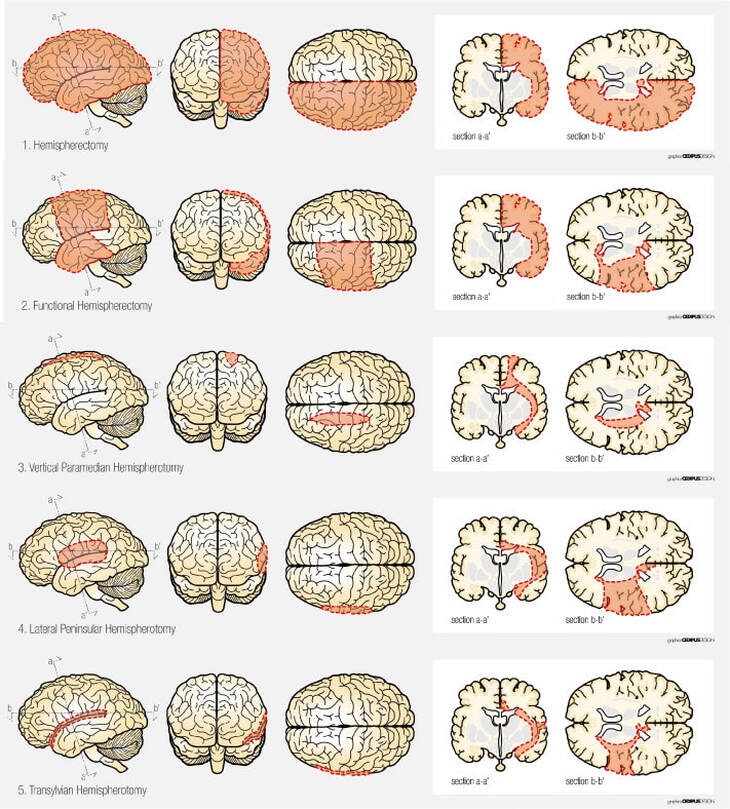

Hemispherotomy = Hemispherotomy? Typology of different functional disconnection procedures

1/18/2024

Nurida Boddenberg

Hemispherotomy is a surgical procedure performed in cases of severe epilepsy, and it has sparked interesting debates about unusual or borderline consciousness. Its outcome is often described as an anatomically preserved cerebral hemisphere that nonetheless has no functional connection to the rest of the brain or body. The isolated hemisphere is sometimes physiologically intact and allows for electrophysiological studies (Hawasli 2017). This led Bayne, Seth, and Massimini (2020) to propose the idea of an 'island of awareness' in this context. However, the actual outcome of the procedure varies. These variations may change what such islands of awareness look like, with implications for the consciousness debate. The objective of this post is to clarify the various procedures that are referred to under the term "hemispherotomy".

Figure 1: A schematic representation of some common hemispherotomy procedures. It shows peri-insular hemispherotomy (blue), in which the temporal lobe (red) is sometimes also resected. It also shows trans-sylvian hemispherotomy (orange) and parasagittal hemispherotomy (green). Picture from Cohen, A. R. (2016): Section VIII: Epilepsy and Functional Disorders. In: Pediatric Neurosurgery.

The controlled removal of a hemisphere began in the 1920s with the complete resection of one hemisphere, known as anatomical hemispherectomy (Dandy). Advances in neurosurgery after the 1970s led to less invasive techniques such as hemidecortication (Ignelzi and Bucy) and functional hemispherectomy (Rasmussen). These techniques preserve more anatomical integrity while still achieving the desired functional disconnection. Still, much of the cerebral tissue is removed, which reduces the chances of conscious activity in that hemisphere.
Then, in the 1990s, a refined set of techniques emerged under the term "hemispherotomy". They aimed to isolate the affected hemisphere functionally with even less tissue removal. These procedures reduced risks such as blood loss and infection. However, they raised concerns about incomplete disconnection and the potential need for a second surgery (see Figure 1 for a comparison of the extent of disconnection). Choosing a specific hemispherotomy technique is a critical decision. It depends on factors such as the available tools, the surgeon's expertise, and the patient's specific pathology. Each technique has its own intricacies, which can shape the postoperative anatomy and affect the likelihood of complete disconnection.

The vertical parasagittal hemispherotomy (Delalande) uses a vertical incision near the brain's midline to reach the corpus callosum and white matter tracts for disconnection. It is well suited to cases of hemispheric atrophy. In contrast, the peri-insular hemispherotomy (Villemure and Daniel) uses a lateral approach to disconnect the affected hemisphere. This approach provides the shortest working corridor and preserves deep structures such as the thalamus, midbrain, basal ganglia, cerebral arteries, and cranial nerves. The lateral trans-sylvian (keyhole) approach (Schramm) is a variation of the peri-insular technique. It proceeds through the Sylvian fissure to excise parts of the inner temporal lobe. It then continues with disconnections at the frontal lobe, through the corpus callosum, and into the parietal and occipital regions. It is suited to patients with large cysts between the frontal and temporal lobes. However, both lateral methods have historically been associated with earlier seizure recurrence and higher odds of seizure return than vertical techniques. A possible reason is less thorough disconnection. Incomplete disconnection could leave residual sensory or motor connections, which would affect any potential 'awareness' in the isolated hemisphere.
Endoscopic hemispherotomy (Chandra and Sood) marks a significant milestone in minimally invasive neurosurgery. This technique uses endoscopic instruments to perform the procedure through smaller openings. The aim is to minimize complications (e.g., blood loss) while preserving efficacy. However, structures that lie deep and run parallel to the plane of disconnection are more easily missed. This may lead to persistent or recurrent seizures and leave some pathways connected. After hemispherotomy, the isolated hemisphere may also shrink, especially if certain blood vessels are tied off during the procedure. The long-term results can then be quite similar to those of an anatomical hemispherectomy.

In conclusion, hemispherotomy is a critical intervention for people with severe epilepsy, and it may also offer a unique window into consciousness. Returning to the initial question, we recognize that hemispherotomy is not just about disconnecting a dysfunctional hemisphere. Its many intricacies can also provide insights into how isolated brain regions function.

Figure 2: Schematic view of surgical techniques based on removal and/or disconnection: 1) (anatomical) hemispherectomy, 2) functional hemispherectomy, 3) vertical hemispherotomy, 4) peri-insular hemispherotomy, 5) trans-sylvian functional hemispherectomy. Picture from Marras, C. E. et al. (2010). Hemispherotomy and functional hemispherectomy: Indications and outcomes. Epilepsy Research, 89(1), pp. 104-112.


Uwe Peters

Many people have recently been impressed by the capabilities of OpenAI's ChatGPT-4. A collaborator (Ushnish Sengupta) and I therefore decided to ask the LLM a question. We asked which necessary and sufficient criteria it would use to tell with certainty whether a brain organoid, or an LLM such as itself, is phenomenally conscious. Here is what it said:

“Phenomenal consciousness refers to the subjective experience, the "what it feels like" aspect of consciousness. It is often referred to as qualia, the singular, individual instances of conscious experience. The challenge of quantifying phenomenal consciousness lies in its inherently subjective nature. However, we can approach this from different angles, attempting to derive quantifiable metrics based on observable behavior or measurable neurological activity. Here are a few speculative criteria:

These criteria are, of course, not without problems. For example, they may risk over-attributing consciousness to systems that are merely complex or adaptive without being conscious. Moreover, they don't directly address the hard problem of consciousness - the question of why and how these physical processes should give rise to subjective experience. Nonetheless, they may provide a starting point for empirical investigations into the nature and extent of phenomenal consciousness.”

Charlotte Gauvry

Consider people in a coma, under anesthesia, or in a fetal state, as well as invertebrate animals, brain organoids, or advanced AI systems. In these cases we may be dealing with beings that are conscious only to some, potentially minimal, extent. They may be only barely conscious. We will therefore refer to these cases as instances of borderline consciousness. Investigating them is important. Legal protection (e.g., of brain organoids) or even life and death (e.g., the withdrawal of life support from comatose patients) may depend on whether someone or something is conscious. Our notion of 'borderline consciousness' is related to, but different from, what Schwitzgebel (2021) calls instances of "in-between" mentality: “when it’s neither determinately true nor determinately false that experience is present.” Schwitzgebel aims to show that such states exist. This is challenging, because we cannot introspectively detect them (if we could, they would be fully conscious). Schwitzgebel therefore argues for borderline consciousness indirectly. He first holds that, as far as the distribution of consciousness is concerned, we must choose among four undesirable options: (1) only human beings are conscious, (2) everything is conscious (panpsychism), (3) there is a strict distinction between conscious and unconscious states, or (4) we must accept what Schwitzgebel calls borderline consciousness. On common-sense grounds, (1) and (2) are easy to reject (almost everyone would accept that dogs are sentient but electrons are not). (3) appears more robust. However, empirical arguments based partly on developmental and evolutionary theories can be mobilized to challenge it. These theories suggest that consciousness does not arise out of nowhere.
Consequently, there is no “line in the sand” between unconscious and conscious states, between the fetal and infant states, or between different stages of the evolutionary lineage of human beings. Moreover, all the physiological criteria traditionally used to measure consciousness appear to be graded. Examples include activation of a global network, attention to intermediate-level representations, and phi in integrated information theory (IIT). This suggests that there is no clear boundary between conscious and non-conscious states. Hence, there are good intuitive and empirical reasons to accept (4). Our project is related to Schwitzgebel's notion of "in-between consciousness". We will focus primarily on cases in which consciousness may be so minimally present that it is difficult to determine whether it is present at all. The potentially unusual kind of minimal consciousness that we call 'borderline consciousness' (e.g., in brain organoids) may well turn out to be "in-between consciousness". This remains to be seen. The key objectives of the project are to investigate ways of finding out (a) whether, for example, brain organoids or sophisticated AI systems are conscious at all, (b) what this consciousness might consist of (e.g., sentience, valenced experience, self-awareness), and (c) what ethical and epistemic risks may arise in the science of consciousness in relation to these borderline cases.
